Hand Proximate User Interfaces

Novel UI for in-situ mixed reality interactions on and around the hand.

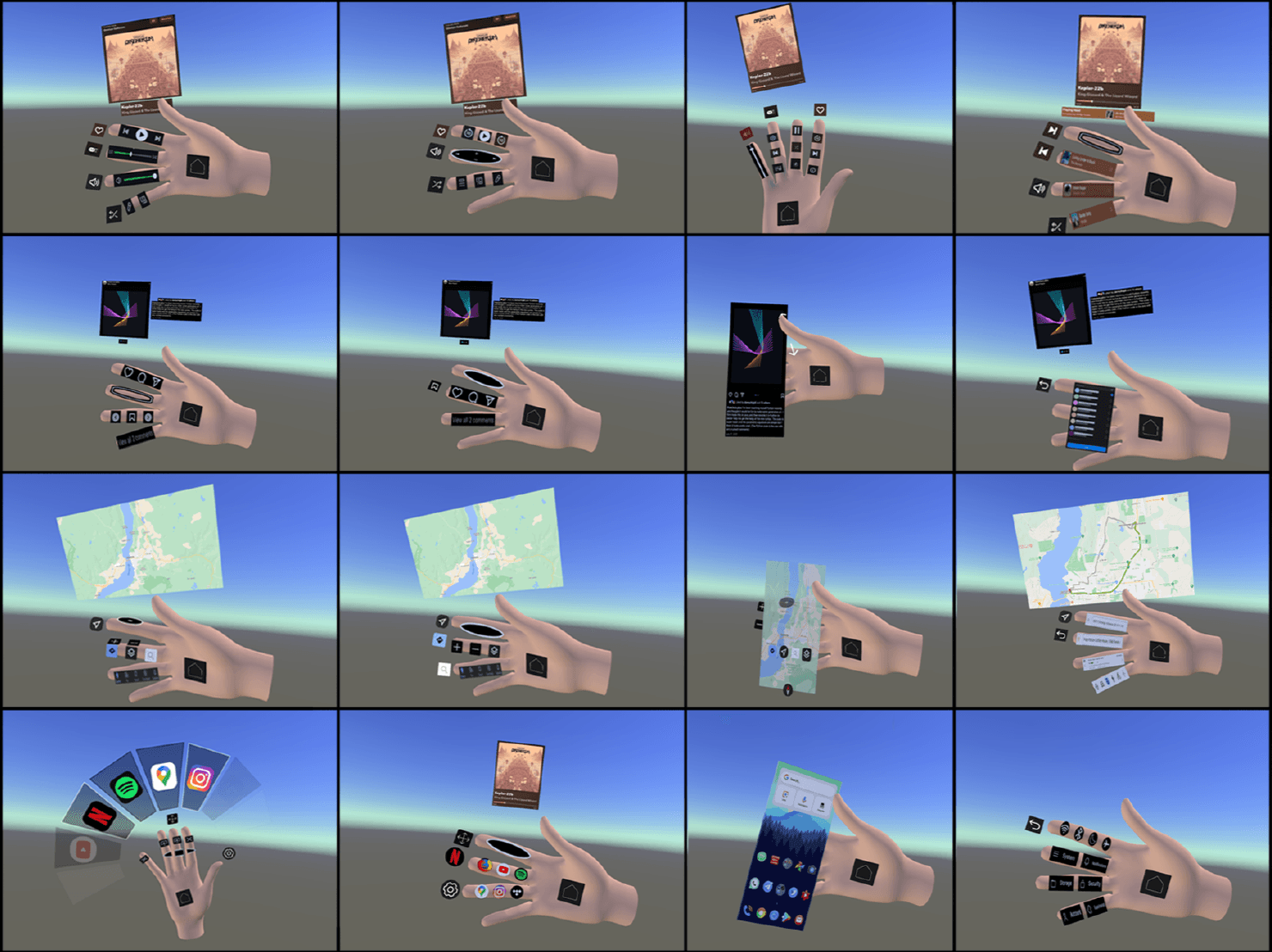

All four implementations, as shown in the publication.

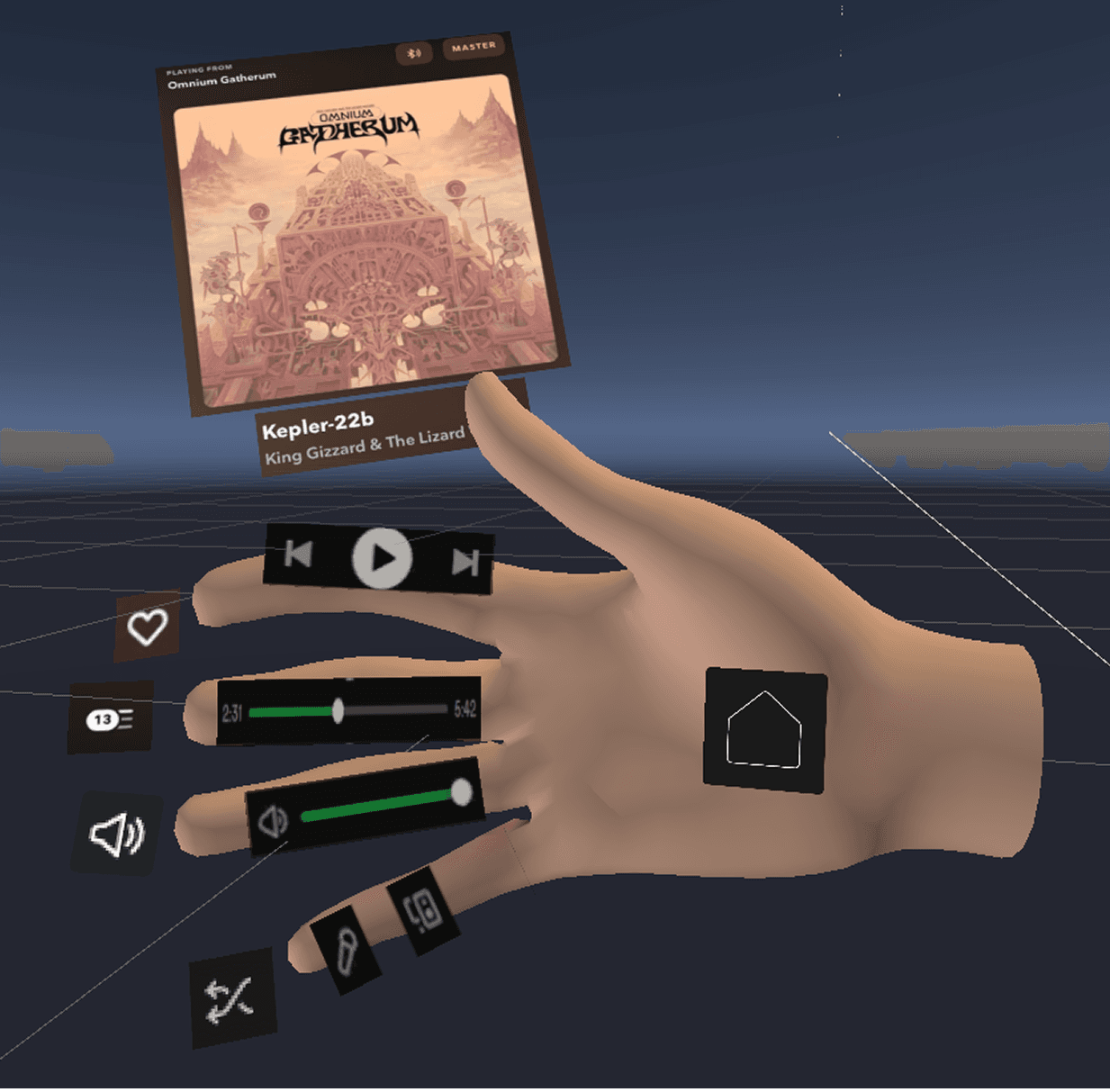

HPUI media player implementation.

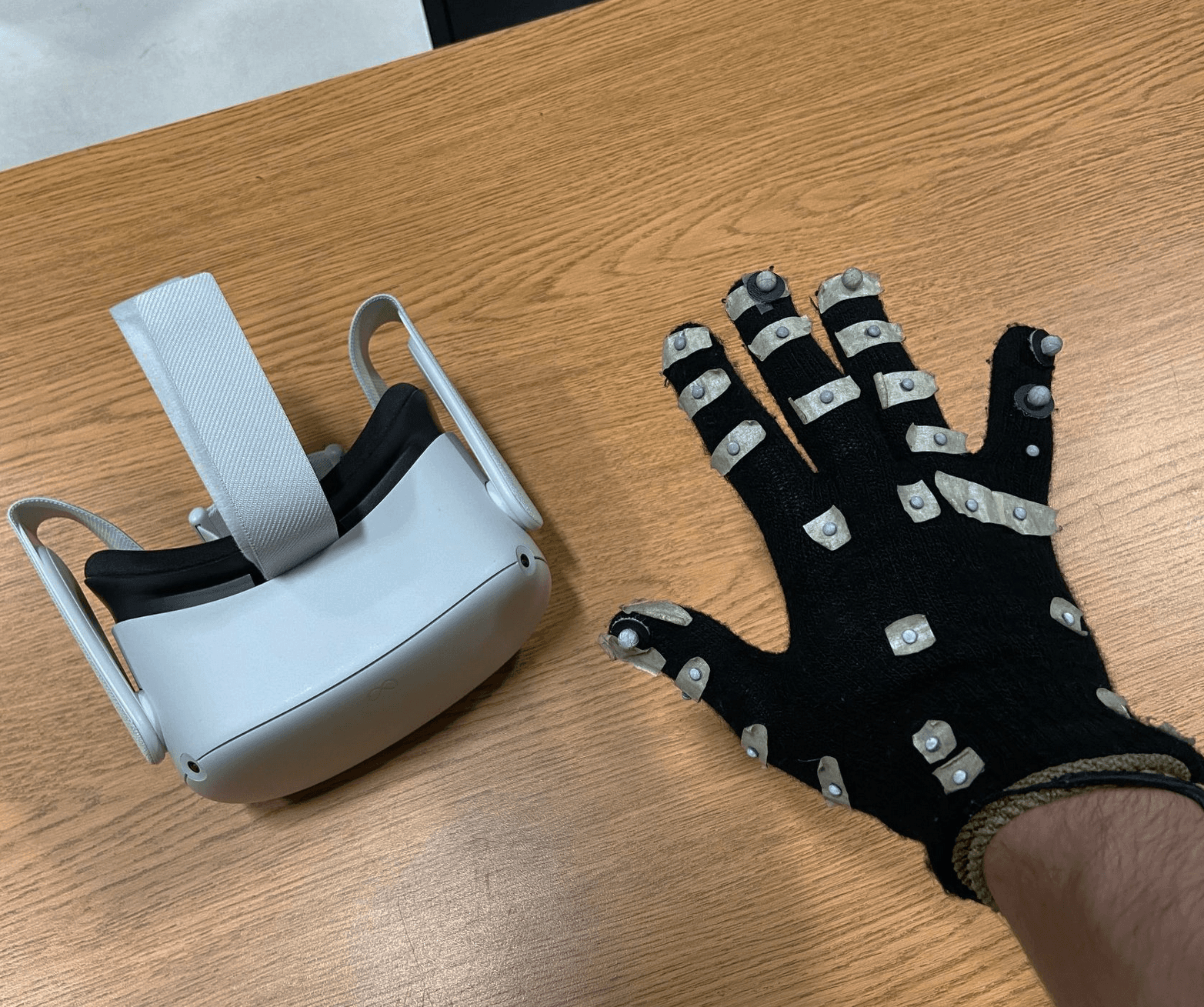

Headset and the custom Vicon tracking glove designed by my co-author.

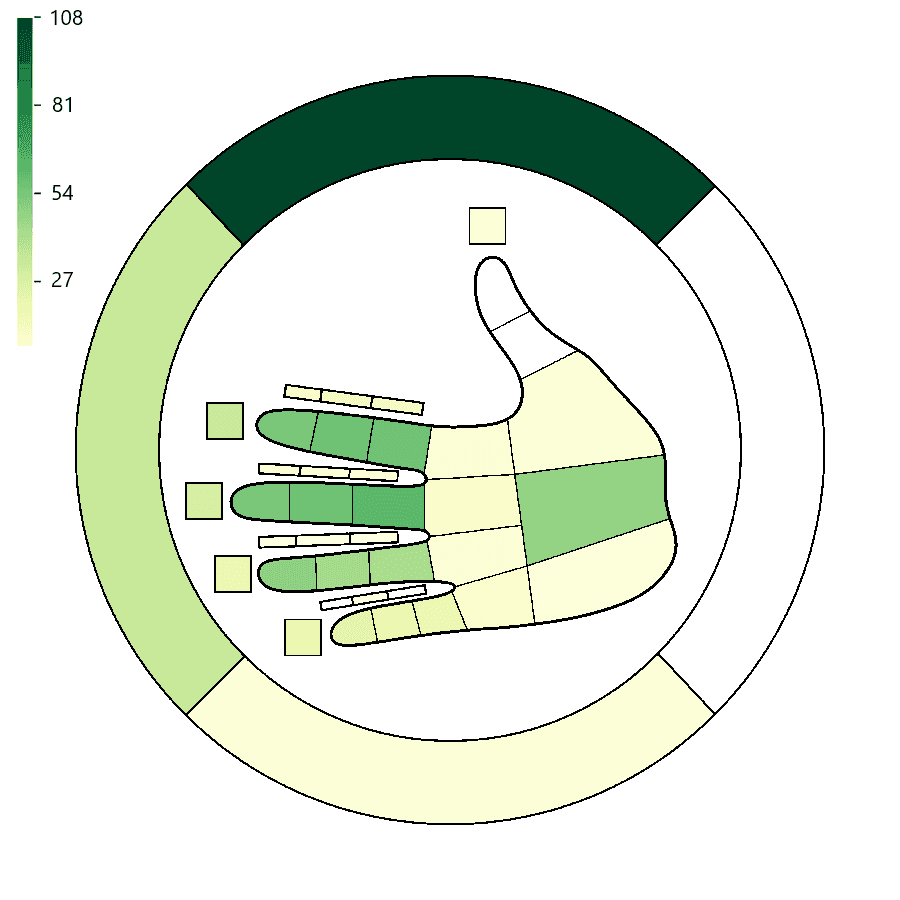

Custom Python heatmap showing participants' element placements.

PROJECT

Hand Proximate User Interfaces

YEAR

2022-2023

DESCRIPTION

I implemented new Hand-Proximate User Interfaces (HPUIs), a novel in-situ mixed reality interaction modality first proposed and implemented by Faleel et al. These new HPUIs spanned four applications: Media Player, Social Media, Maps, and Home Menu. Each featured multiple layouts and a submenu for exploring navigation, such as a playlist queue in Media Player or a settings menu in Home Menu.

The system ran as a Unity application on a Meta Quest 2 HMD via Meta Quest Link, with Vicon motion tracking providing millimeter-precision hand and head tracking. This setup let participants interactively evaluate the HPUI designs in a participatory design study. To learn more about the study, please refer to the project publication link.

This project also involved developing a custom Python heatmap generator to visualize where participants placed elements during testing. It combined OpenCV for image processing, Pandas for data manipulation, and Matplotlib for heatmap generation. As an added bonus, the generator was built as an internal CLI tool, making it easy to generate heatmaps of any shape for future work.
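The tool's source is not included on this page, so the following is only a minimal, hypothetical sketch of how such a placement heatmap could be produced. It assumes placements are exported as a CSV with hypothetical columns `x` and `y`, normalized to [0, 1] from the top-left of a reference image (for example, a hand outline). The grid size is configurable, which is one way to support heatmaps "of any shape". To keep dependencies light, the sketch swaps the OpenCV processing step for a separable Gaussian blur written in NumPy; the function names `build_heatmap`, `save_heatmap`, and `main` and all CLI flags are illustrative, not the original tool's interface.

```python
import argparse
from typing import Optional, Sequence

import numpy as np
import pandas as pd


def gaussian_kernel(sigma: float) -> np.ndarray:
    """Return a normalized 1-D Gaussian kernel covering roughly +/- 3 sigma."""
    radius = max(1, int(round(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-(x ** 2) / (2 * sigma ** 2))
    return k / k.sum()


def build_heatmap(df: pd.DataFrame, width: int, height: int, sigma: float = 2.0) -> np.ndarray:
    """Bin normalized (x, y) placements into a height x width grid, then blur it.

    Assumes hypothetical columns "x" and "y" in [0, 1], measured from the
    top-left corner of the reference image.
    """
    k = gaussian_kernel(sigma)
    if len(k) > min(width, height):
        raise ValueError("grid must be at least as large as the blur kernel")

    # Convert normalized coordinates to pixel indices, clamped to the grid.
    cols = np.clip((df["x"].to_numpy() * width).astype(int), 0, width - 1)
    rows = np.clip((df["y"].to_numpy() * height).astype(int), 0, height - 1)
    counts = np.zeros((height, width), dtype=float)
    np.add.at(counts, (rows, cols), 1.0)  # accumulates repeated placements

    # Separable Gaussian blur: convolve every row, then every column.
    blurred = np.apply_along_axis(lambda r: np.convolve(r, k, mode="same"), 1, counts)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="same"), 0, blurred)


def save_heatmap(heat: np.ndarray, out_path: str, background: Optional[str] = None) -> None:
    """Render the heatmap to an image file, optionally over a background image."""
    import matplotlib

    matplotlib.use("Agg")  # headless backend, suitable for a CLI tool
    import matplotlib.pyplot as plt

    extent = (0, heat.shape[1], heat.shape[0], 0)  # pixel coordinates, origin at top-left
    fig, ax = plt.subplots()
    if background:
        ax.imshow(plt.imread(background), extent=extent)
    ax.imshow(heat, cmap="hot", alpha=0.6, extent=extent)
    ax.axis("off")
    fig.savefig(out_path, bbox_inches="tight")
    plt.close(fig)


def main(argv: Optional[Sequence[str]] = None) -> None:
    """Hypothetical CLI: read placements, build the heatmap, save it."""
    parser = argparse.ArgumentParser(description="Render a placement heatmap (sketch).")
    parser.add_argument("csv", help="CSV with normalized x, y columns")
    parser.add_argument("--width", type=int, default=200)
    parser.add_argument("--height", type=int, default=400)
    parser.add_argument("--sigma", type=float, default=4.0)
    parser.add_argument("--background", help="optional reference image, e.g. a hand outline")
    parser.add_argument("-o", "--output", default="heatmap.png")
    args = parser.parse_args(argv)
    heat = build_heatmap(pd.read_csv(args.csv), args.width, args.height, args.sigma)
    save_heatmap(heat, args.output, args.background)
```

Calling `main(["placements.csv", "--background", "hand.png"])` (both file names hypothetical) would write `heatmap.png`; in a script, `main()` would typically be invoked from an `if __name__ == "__main__":` guard. Keeping binning separate from rendering makes the grid easy to test or reuse for a differently shaped layout.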

ORGANIZATIONS

Okanagan Visualization and Interaction Lab

University of British Columbia

LINKS


© 2025 Francisco Perella-Holfeld